Pramāṇa NLP

(NB! While I want most posts in this blog to be relatively accessible, this one is rather technical. It is mainly for more advanced readers interested in pramāṇa studies and/or those already thinking about issues surrounding the digitization of pre-modern texts.)

By way of background: Pramāṇa is a key concept in Indian epistemology, understood as a foundational means of secure knowledge, the most important subtypes of which are direct perception (pratyakṣa), inferential reasoning (anumāna), and linguistic testimony (śabda). Different schools of thought disagreed on the details, but essentially all epistemologists writing in Sanskrit were deeply invested in the idea (even those trying to undermine it), and nearly everyone believed that correct knowledge concerning the self (and what is not the self) is the main driver of spiritual liberation.

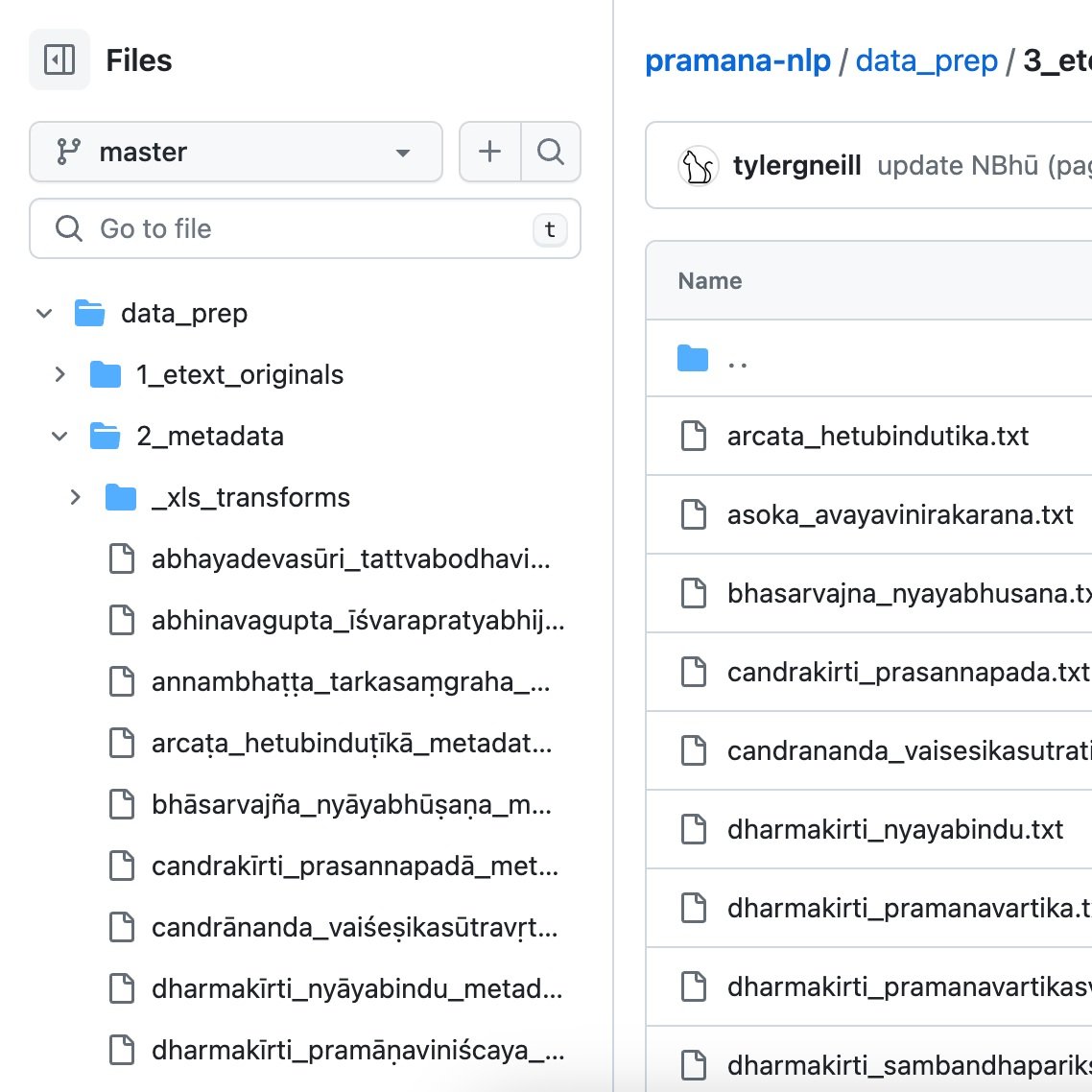

The Pramāṇa NLP project grew out of the need to extract research insights from a large and formidable corpus of such epistemological works. The idea (in fact, the initial list of texts to include) came from the Nyāyabhāṣya Digital Critical Edition project team (including Philipp Maas, although the DFG page doesn’t mention him now), and I took it and ran with it as part of my dissertation work. Because of my own focus on a single work (the 10th-century Nyāyabhūṣaṇa), the corpus includes only those works most relevant to it (mostly Nyāya and Buddhist texts from the first millennium). These 60-odd texts were sourced from various other corpora (GRETIL, SARIT, etc.), restructured when needed, and cleaned (with regular expressions and, regrettably, often a great many man-hours). The main use of the corpus so far has been as the basis for the Vātāyana system (see the description on the Projects page), but it is open source and designed for any number of downstream NLP uses, hence the name.

When I say that it is designed for NLP, I mean the following things:

1. Clear division between structure and content: The words that these authors wrote (i.e., the content) and everything else found in modern edited books (i.e., the structure, including page numbers, an editor’s chapter headings and notes, etc.) are easily distinguished through markup. XML would certainly also have worked for this, but I wanted the raw files to be more human-readable in the short term, and conversion to XML can always happen later, so I opted for a simple scheme based on brackets, explained here.

2. Removable structure: All structural elements can be programmatically removed, and separately so (e.g., it's possible to keep page numbers and headers but remove text-critical notes about variant readings). Given the markup scheme I chose, simple regexes suffice for this; no XSLT is required.

3. Clean and continuous natural-language content: What remains after the structural elements are removed looks like real, modern language. No spurious whitespace corrupts individual words (unfortunately a frequent problem with digitized Sanskrit!), yet useful punctuation still separates the text into meaningful units, including sentences and, perhaps even more importantly, paragraphs wherever possible.

4. Fine-grained identifiers: Any given portion of text is associated with an identifier that covers at most a paragraph or so of material. This makes it possible to point a user to a relatively small piece of the corpus in a less disorienting way, which is useful both for making hyperlinks and for delivering search results.

5. Quality and quantity: For any given work represented in the corpus, I usually chose from among several possible sources, and I made the extra effort to secure the best-quality option, even when that meant painstakingly extracting it from the SARIT database (e.g., Jayantabhaṭṭa’s Nyāyamañjarī) or digitizing it myself from scratch (e.g., Vyomaśiva’s Vyomavatī). Sometimes it meant biting the bullet and excluding an important work because of serious problems with existing versions and insufficient time to produce my own (e.g., Vasubandhu’s Abhidharmakośabhāṣya). I also spent several years on the effort, trying to cover a respectable portion of the works relevant to my subject of study (the Nyāyabhūṣaṇa). After eliminating much processed material that did not pass the final quality check, the corpus comes to over two million words; for scale, the Digital Corpus of Sanskrit is around five million words.
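To make features 1, 2, and 4 above a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical bracket notation (`[page N]` for page breaks, `[head: ...]` for editorial headings, `[note: ...]` for text-critical notes, and `{ID}` at the start of each paragraph as its identifier); these conventions are invented for illustration and are not the actual Pramāṇa NLP scheme. The point is only that each kind of structure can be stripped separately with one regex, and that the remaining content can then be keyed by fine-grained identifier.

```python
import re

# Hypothetical bracket conventions, invented for illustration only; the real
# Pramāṇa NLP markup scheme is documented separately and may differ.
STRUCTURE_PATTERNS = {
    "page": re.compile(r"\[page \d+\]"),         # page break, e.g. [page 12]
    "heading": re.compile(r"\[head: [^\]]*\]"),  # editor's heading
    "note": re.compile(r"\[note: [^\]]*\]"),     # text-critical note
}
# Hypothetical paragraph identifier at the start of a line, e.g. {demo_1}.
ID_PATTERN = re.compile(r"^\{([^}]+)\}\s*", re.MULTILINE)


def strip_structure(text, remove=("page", "heading", "note")):
    """Remove only the selected kinds of structural markup."""
    for kind in remove:
        text = STRUCTURE_PATTERNS[kind].sub("", text)
    # Tidy the spaces left behind where markup was removed.
    text = re.sub(r" {2,}", " ", text)
    return re.sub(r" +$", "", text, flags=re.MULTILINE)


def split_by_identifier(text):
    """Map each paragraph identifier to the content that follows it."""
    # With one capturing group, re.split yields [prefix, id1, body1, id2, body2, ...].
    parts = ID_PATTERN.split(text)
    return {ident: body.strip() for ident, body in zip(parts[1::2], parts[2::2])}


# Invented sample text using the hypothetical conventions above.
sample = (
    "{demo_1} [head: Introduction] atha pramāṇam [note: B reads pramāṇāni] uktam.\n"
    "{demo_2} tad dvividham [page 2] pratyakṣam anumānaṃ ceti.\n"
)

print(split_by_identifier(strip_structure(sample)))
print(strip_structure(sample, remove=("note",)))  # keeps headings and page numbers
```

Because each structural element type has its own pattern, keeping page numbers and headings while dropping variant readings is just a matter of passing `remove=("note",)`.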

Taken together, these features are what I think make the corpus nearly unique in the Sanskrit world.

To finish this post, I have three more reflections, followed by a summary.

1. Reflecting on the ideals of the project, I can see that I was strongly influenced by a digital humanities project called Text-Fabric, by Dirk Roorda. During a 2017 workshop held in the inspiring glass-walled classroom at the top of the Leipzig Paulinum tower (pictured above), he presented a radical view of text as markup, which basically meant thinking of the text as a list of words or characters and treating the indices of that list as primary and the text itself as secondary. Within a Jupyter Notebook (which I hadn’t really seen before), he had the entire Hebrew Bible at his fingertips and me on the edge of my seat. The textual data really seemed to be one continuous manifold, with content and annotation sitting comfortably side by side, a hyper-dimensional stream of concentrated information. I’m not sure to what extent this project is still in use, but a certain ethos of purity, elegance, and accessibility has stuck with me and guided my own vision.

2. A clarification: My goal was not, and is not, to fix every textual problem I find while digitizing. Granted, I did let myself do some of that (too much, to tell the truth). Done properly, such work requires at least some rigorous philological training (some of which I do have) AND an enormous amount of (lovely, tedious, meritorious) work carried out in conversation with a community of other skilled philologists, which takes far more time than any individual, or even a full-time team, has available for a project of this scale. At some point, we have to settle for taking the best that philology has produced so far, doing our corpus linguistics on that, and thinking about robust ways to feed results back into more and better philology, without getting bogged down ourselves.

3. Finally, on putting yourself out of business: Pramāṇa NLP shouldn’t need to exist as a repository separate from the better-known ones. This is why I said “regrettably” above about the hours spent on text cleaning: insofar as my laborious cleaning process has produced an updated version of a text, those updates (which may well be useful, but which have not been peer-reviewed in any scientifically robust way) now sit on a separate branch of development that can easily cause confusion and/or fall into obscurity. But all is not lost, because reconciliation is possible: these improvements can be fed back into the source repositories. This does take a good deal of effort, and it may also require compromise if not all of my changes are accepted as correct. In the next phase of development, I’d like to make sure that each text in the Pramāṇa NLP corpus can return to, or join, an established online repository in improved form.

4. To sum up, if there is a single new data concept that is responsible for the creation of Pramāṇa NLP, and that I hope the project can help others understand, it is this: it should be possible to transform a repository document into a document ready for NLP methods by entirely automatic means. In other words, I should be able to write a data ingestion pipeline that downloads Sanskrit corpus data from well-known repositories on the internet, runs preprocessing scripts, and can be rerun in the same way whenever the source has been updated and/or the preprocessing code has changed. At present, this is far from true in most (but not all) cases. But it is important, and it is the message Pramāṇa NLP is meant to convey, chiefly through the example of the Vātāyana project built on top of it. Only after making more progress on this central mission do I think Pramāṇa NLP should be expanded to include other philosophical works (many remain!), to say nothing of extending its approach (hopefully someday!) to other genres.
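The kind of rerunnable ingestion pipeline described in the last point might look roughly like the following Python sketch. Everything here is hypothetical: `preprocess` stands in for real cleaning steps, the source URLs are placeholders, and `PREPROCESS_VERSION` is a manually bumped constant standing in for "the preprocessing code has changed". Each output is recorded with a hash of the source text plus that version, so a rerun reprocesses only those texts whose source or code has changed.

```python
import hashlib
import json
import urllib.request
from pathlib import Path

# Hypothetical convention: bump this string whenever the preprocessing code
# changes, so that every text is reprocessed on the next run.
PREPROCESS_VERSION = "1"


def fetch(url):
    """Download one source document and decode it as UTF-8."""
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def preprocess(raw):
    """Placeholder for the real cleaning steps; here it only normalizes whitespace."""
    return " ".join(raw.split())


def run_pipeline(sources, out_dir, fetcher=fetch):
    """Fetch each source and (re)process it only if the source text or
    PREPROCESS_VERSION changed since the last run.

    `sources` maps an output name to a URL, e.g.
    {"some_text": "https://example.org/some_text.txt"} (hypothetical URL).
    Returns the names of the texts that were rebuilt.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    manifest_path = out_dir / "manifest.json"
    manifest = json.loads(manifest_path.read_text()) if manifest_path.exists() else {}

    rebuilt = []
    for name, url in sources.items():
        raw = fetcher(url)
        # Fingerprint of the source text combined with the code version.
        key = hashlib.sha256(f"{PREPROCESS_VERSION}\n{raw}".encode("utf-8")).hexdigest()
        if manifest.get(name) == key:
            continue  # Neither the source nor the preprocessing code has changed.
        (out_dir / f"{name}.txt").write_text(preprocess(raw), encoding="utf-8")
        manifest[name] = key
        rebuilt.append(name)

    manifest_path.write_text(json.dumps(manifest, indent=2))
    return rebuilt
```

Passing a different `fetcher` (for example, one that reads local files) makes the pipeline easy to test without network access; in this sketch every run still downloads each source, but only changed texts are reprocessed and rewritten.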